Difference between revisions of "Missing PIDs and Hashing"

(→Identifiers and Id Problems) |

|||

| Line 1: | Line 1: | ||

===Identifiers and Id Problems=== | ===Identifiers and Id Problems=== | ||

| − | All records within the MDR system are identified by using Id fields, with relationships between records represented by the use of some of those Id fields as foreign keys. Within the sd and ad tables in the 'source' databases (i.e. the ones that hold the data for a single data source), all tables are constructed with an integer identity key, that is generated automatically by the system in ascending sequence. In addition study tables have a 'source data study identifier' field: '''sd_sid''', and data object tables have a 'source data object identifier field: '''sd_oid''', as shown in the table creation code below. | + | All records within the MDR system are identified by using Id fields, with relationships between records represented by the use of some of those Id fields as foreign keys. Within the sd and ad tables in the 'source' databases (i.e. the ones that hold the data for a single data source), all tables are constructed with an integer identity key, that is generated automatically by the system in ascending sequence. In addition study tables have a 'source data study identifier' field: '''sd_sid''', and data object tables have a 'source data object identifier field: '''sd_oid''', as shown in the excerpts from the table creation code below. |

<div style="font-family: monospace; font-size: 13px"> | <div style="font-family: monospace; font-size: 13px"> | ||

<pre> | <pre> | ||

| Line 36: | Line 36: | ||

</div> | </div> | ||

The integer Id fields are '''not''' persistent (for example in the sd schemas they are destroyed and recreated during each harvest) and are not designed to be used outside of their immediate context. They are there as part of normal database 'good practice', to allow records to be uniquely identified, e.g. for editing in the pgAdmin table browser, and play little direct part ion the procersses within the MDR. The '''sd_sid''' and '''sd_oid''' fields, however, are crucial. These are derived from the source data in fixed ways, and can usually be regarded as persistent, though there are a few problems with their derivation, as described below. They are used, as foreign keys, to bind both attributes and data objects to the 'source' study, as well as data object attributes to the object itself. sd_sids and sd_oids are unique within the studies or data objects tables '''in any source database''', but they cannot be guaranteed to be unique across the whole of the MDR, even though in practice they often are. To uniquely identify a study or object across the MDR the sd_sid or sd_oid must be combined with the source id (a 6 digit identifier). In the individual source databases the addition of the source id is not required, but in the mdr database itself, that contains data from all sources, the combination of source id and sd_sid / sd_oid is neeeded.<br/> | The integer Id fields are '''not''' persistent (for example in the sd schemas they are destroyed and recreated during each harvest) and are not designed to be used outside of their immediate context. They are there as part of normal database 'good practice', to allow records to be uniquely identified, e.g. for editing in the pgAdmin table browser, and play little direct part ion the procersses within the MDR. The '''sd_sid''' and '''sd_oid''' fields, however, are crucial. These are derived from the source data in fixed ways, and can usually be regarded as persistent, though there are a few problems with their derivation, as described below. They are used, as foreign keys, to bind both attributes and data objects to the 'source' study, as well as data object attributes to the object itself. sd_sids and sd_oids are unique within the studies or data objects tables '''in any source database''', but they cannot be guaranteed to be unique across the whole of the MDR, even though in practice they often are. To uniquely identify a study or object across the MDR the sd_sid or sd_oid must be combined with the source id (a 6 digit identifier). In the individual source databases the addition of the source id is not required, but in the mdr database itself, that contains data from all sources, the combination of source id and sd_sid / sd_oid is neeeded.<br/> | ||

| − | In the mdr database, where all data is aggregated, the studies and data objects do receive persistent, unique integer Ids, which can be interpreted as 'ECRIN Accession Ids'. These can be equated to one or more sd_sids or sd_oids because studies, and objects, may be present in one or more sources. | + | In the mdr database, where all data is aggregated, the studies and data objects do receive persistent, unique integer Ids, which can be interpreted as 'ECRIN Accession Ids'. These can be equated to one or more sd_sids or sd_oids because studies, and objects, may be present in one or more sources. The mdr database includes tables that store the relationship between the source data identifiers and the matching ECRIN Ids. |

<div style="font-family: monospace; font-size: 13px"> | <div style="font-family: monospace; font-size: 13px"> | ||

<pre> | <pre> | ||

| − | + | CREATE TABLE core.studies( | |

id INT NOT NULL PRIMARY KEY | id INT NOT NULL PRIMARY KEY | ||

, display_title VARCHAR NULL | , display_title VARCHAR NULL | ||

| Line 46: | Line 46: | ||

, ... | , ... | ||

| − | + | CREATE TABLE core.study_identifiers( | |

id INT NOT NULL PRIMARY KEY | id INT NOT NULL PRIMARY KEY | ||

, study_id INT NOT NULL | , study_id INT NOT NULL | ||

| Line 53: | Line 53: | ||

, ... | , ... | ||

| − | + | CREATE TABLE core.data_objects( | |

id INT NOT NULL PRIMARY KEY | id INT NOT NULL PRIMARY KEY | ||

, display_title VARCHAR NULL | , display_title VARCHAR NULL | ||

| Line 59: | Line 59: | ||

, ... | , ... | ||

| − | + | CREATE TABLE core.object_dates( | |

id INT NOT NULL PRIMARY KEY | id INT NOT NULL PRIMARY KEY | ||

, object_id INT NOT NULL | , object_id INT NOT NULL | ||

| Line 67: | Line 67: | ||

</pre> | </pre> | ||

</div> | </div> | ||

| − | + | Note that in the core schema, study and object attributes are grouped with the main study or object by using the study_id or object_id fields, which are the same as the id fields in the study and data object tables respectively. This is similar to the use of sd_sid and sd_oid in the source databases. The linke between studies and data objects, however, which in the source database uses sd_sid as a foreign key, no longer exists in the main mdr database. This is because here the links between studies and objects is many to many, whereas in the source databases it is always one-to-mnany. The core schema therefore requires a join table ('''study_object_links''') to resolve the relationship. The way in which source data ids are matched to one another is described more fully in '''[[Study-study links]]''', whilst the aggregation procxess, including the way in which the persistent ECRIN Ids are created, is described in '''[[Aggregating Data]]'''. | |

| + | Although this scheme works reasonably well there are three Id related problems that need to be resolved<br/> | ||

a) the lack of globally consistent or guaranteed identifiers for studies <br/> | a) the lack of globally consistent or guaranteed identifiers for studies <br/> | ||

b) the lack of simple persistent identifiers (PIDs) for most data objects, and <br/> | b) the lack of simple persistent identifiers (PIDs) for most data objects, and <br/> | ||

c) the lack of any identifiers for both study and object attributes.<br/> | c) the lack of any identifiers for both study and object attributes.<br/> | ||

| − | + | ||

| − | |||

====The Study Ids Problem==== | ====The Study Ids Problem==== | ||

Most studies in the | Most studies in the | ||

Revision as of 14:20, 19 May 2022

Contents

Identifiers and Id Problems

All records within the MDR system are identified by using Id fields, with relationships between records represented by the use of some of those Id fields as foreign keys. Within the sd and ad tables in the 'source' databases (i.e. the ones that hold the data for a single data source), all tables are constructed with an integer identity key, that is generated automatically by the system in ascending sequence. In addition study tables have a 'source data study identifier' field: sd_sid, and data object tables have a 'source data object identifier field: sd_oid, as shown in the excerpts from the table creation code below.

CREATE TABLE sd.studies(

id INT GENERATED ALWAYS AS IDENTITY PRIMARY KEY

, sd_sid VARCHAR NOT NULL

, display_title VARCHAR NULL

, title_lang_code VARCHAR NULL default 'en'

, brief_description VARCHAR NULL

, ...

, ...

CREATE TABLE ad.study_identifiers(

id INT GENERATED ALWAYS AS IDENTITY PRIMARY KEY

, sd_sid VARCHAR NOT NULL

, identifier_value VARCHAR NULL

, ...

, ...

CREATE TABLE ad.data_objects(

id INT GENERATED ALWAYS AS IDENTITY PRIMARY KEY

, sd_oid VARCHAR NOT NULL

, sd_sid VARCHAR NULL

, title VARCHAR NULL

, ...

, ...

CREATE TABLE ad.object_dates(

id INT GENERATED ALWAYS AS IDENTITY PRIMARY KEY

, sd_oid VARCHAR NULL

, date_type_id INT NULL

, ...

, ...

The integer Id fields are not persistent (for example in the sd schemas they are destroyed and recreated during each harvest) and are not designed to be used outside of their immediate context. They are there as part of normal database 'good practice', to allow records to be uniquely identified, e.g. for editing in the pgAdmin table browser, and play little direct part ion the procersses within the MDR. The sd_sid and sd_oid fields, however, are crucial. These are derived from the source data in fixed ways, and can usually be regarded as persistent, though there are a few problems with their derivation, as described below. They are used, as foreign keys, to bind both attributes and data objects to the 'source' study, as well as data object attributes to the object itself. sd_sids and sd_oids are unique within the studies or data objects tables in any source database, but they cannot be guaranteed to be unique across the whole of the MDR, even though in practice they often are. To uniquely identify a study or object across the MDR the sd_sid or sd_oid must be combined with the source id (a 6 digit identifier). In the individual source databases the addition of the source id is not required, but in the mdr database itself, that contains data from all sources, the combination of source id and sd_sid / sd_oid is neeeded.

In the mdr database, where all data is aggregated, the studies and data objects do receive persistent, unique integer Ids, which can be interpreted as 'ECRIN Accession Ids'. These can be equated to one or more sd_sids or sd_oids because studies, and objects, may be present in one or more sources. The mdr database includes tables that store the relationship between the source data identifiers and the matching ECRIN Ids.

CREATE TABLE core.studies(

id INT NOT NULL PRIMARY KEY

, display_title VARCHAR NULL

, title_lang_code VARCHAR NULL

, ...

, ...

CREATE TABLE core.study_identifiers(

id INT NOT NULL PRIMARY KEY

, study_id INT NOT NULL

, identifier_value VARCHAR NULL

, ...

, ...

CREATE TABLE core.data_objects(

id INT NOT NULL PRIMARY KEY

, display_title VARCHAR NULL

, ...

, ...

CREATE TABLE core.object_dates(

id INT NOT NULL PRIMARY KEY

, object_id INT NOT NULL

, date_type_id INT NULL

, ...

, ...

Note that in the core schema, study and object attributes are grouped with the main study or object by using the study_id or object_id fields, which are the same as the id fields in the study and data object tables respectively. This is similar to the use of sd_sid and sd_oid in the source databases. The linke between studies and data objects, however, which in the source database uses sd_sid as a foreign key, no longer exists in the main mdr database. This is because here the links between studies and objects is many to many, whereas in the source databases it is always one-to-mnany. The core schema therefore requires a join table (study_object_links) to resolve the relationship. The way in which source data ids are matched to one another is described more fully in Study-study links, whilst the aggregation procxess, including the way in which the persistent ECRIN Ids are created, is described in Aggregating Data.

Although this scheme works reasonably well there are three Id related problems that need to be resolved

a) the lack of globally consistent or guaranteed identifiers for studies

b) the lack of simple persistent identifiers (PIDs) for most data objects, and

c) the lack of any identifiers for both study and object attributes.

The Study Ids Problem

Most studies in the

The Data Object Ids Problem

Most but not all data objects in the system have an associated URL, but that link cannot be guaranteed to be permanent. It is also true that most (but not all) journal articles have a Pubmed id and / or a doi, but journal articles are handled by the system differently from how most other data objects are processed. They are linked to studies after extraction, while most other objects are linked, if only implicitly, to studies in the sources (trial registries, data repositories) where they are found during extraction. Although the object type could be used - in most cases - to distinguish the objects themselves, and so match objects when importing and comparing data, the type cannot be absolutely guaranteed to be unique, for a particular study, and a composite (parent study + object type) identifier mechanism would be a relatively clumsy two-field mechanism for identifying objects. The need is for a relatively simple mechanism for generating data object identifiers that can be guaranteed to be unique and persistent, or at least as persistent as possible.

The Study / Object Attribute Ids Problem

Study and object attributes - identifiers, titles, instances, dates, topics etc. - have no associated intrinsic identifiers, and here the problem is worse. The problem, especially when web scraping is used, is that there is no guarantee that attributes will be processed in the same order as before, or will be present in the same numbers, which means comparing one unordered set of records with another to identify the new, edited and (more rarely but not impossible) deleted items.

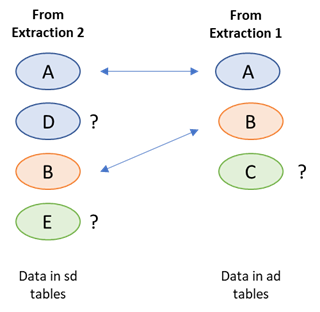

Imagine a study with 3 identifier attributes a, b and c. a is a registry identifier and b and c are sponsor ids. On the next download a funder identifier entry ‘d’ is found, which is the second in the list, while at the same time identifer c has been edited, to become 'e' – the list is now a, d, b and e. The two sets of study identifiers can be compared and a match can be found between the two versions of a and b. But d and e are new to the system and c can no longer be matched. One of d or e corresponds to the pre-edited version of c (see figure 1), but which one?

Records which are identical in each set can be matched, but for objects that are not matched

it is impossible, in general, to determine their origin or relationship with objects in the previous set.

A human could look at the data and, in most cases, seeing that c and e are ‘similar’ can deduce that c has been edited into e, and d is completely new. But a machine cannot do that without a complex scoring / weighting algorithm, that would need to take into account every field in the record, and even then could only make an estimate rather than being 100% certain (the more extensive the editing, the closer the record that results looks like a completely new addition). The result is that the system has to

a) Mark both d and e as new attributes and add them both to the system

b) Mark c as no longer relevant, and ‘retire’ it from the system.

In other words edits have to be handled explicitly as a combination of insert and delete.

It is true that for some types of attribute sets (e.g. object dates), you could make assumptions about the data and say, for example, that a data object would only ever have one date of a certain type. But this mechanism is only possible for some types of attribute (for example studies may have multiple identifiers of the same type) and assumptions about uniqueness of type are dangerous to make with very large data sets, often with poorly managed data. The need is therefore to find an efficient method for identifying changes in sets of attributes, and for actioning the changes that are required.

Using hashes for Object PIDs

The object PID problem, for objects in study based data sources can be solved by hashing together, using the common MD5 hashing algorithm, a few key fields of the data object, with those fields representing a unique combination of values. The fields selected are

- the source data identifier of the 'parent' study - usually a trial registry id. Because different registries use different formats and prefixes for their ids these can, at the moment, be guaranteed to be globally unique - though the requirement is only that they are unique within a single source.

- the display title of the object. Very few data objects have explicit titles, and even when they do exist it is not possible to guarantee that they are unique. The approach to creating an object's display title is therefore to concatenate the study title (the short, public title) with the object's name or, more normally, its type, with ' :: ' used to separate the two components. Where more than one object of the same type exists, linked to the same study - which is relatively rare but which can occur, for example, if different versions of a protocol have been uploaded, or multiple datasets are made available, it is necessary to use some mechanism - often by using a file name - to ensure that the display name remains unique. The display titles are created as part of the initial data harvesting, so that process needs to include the necessary code to ensure title uniqueness. As an extra check, if data objects are added to an existing study from a different source, because they 'belong' to the same study listed in another registry or repository (with the exception of journal papers and registry entries) they are not added if they have the same display title as an existing object.

For example, during the harvesting of XML files from Clinicaltrials.gov, one possible linked data object is a registry based results summary. The system first creates the object's display title using the study title (title_base) and the object type, and then hashes this together with the study id (sid):

object_display_title = title_base + " :: CTG Results entry";

sd_oid = HashHelpers.CreateMD5(sid + object_display_title);

While the CreateMD5 function itself (a static helper function) is:

public static string CreateMD5(string input)

{

// Use input string to calculate MD5 hash

using (MD5 md5 = MD5.Create())

{

byte[] inputBytes = System.Text.Encoding.ASCII.GetBytes(input);

byte[] hashBytes = md5.ComputeHash(inputBytes);

// return as base64 string

// 16 bytes = (5*4) characters + XX==,

// 24 rather than 32 hex characters

return Convert.ToBase64String(hashBytes);

}

}

Note that while the MD5 function itself returns 32 hex characters the functions shortens this a little to 24 characters (the last always being '==' becasue only 22 are actually required), as a base 64 string. The function therefore produces a relatively short single-field identifier for all study-linked data objects, which for all practical purposes is unique.

Using Hashes for comparison I

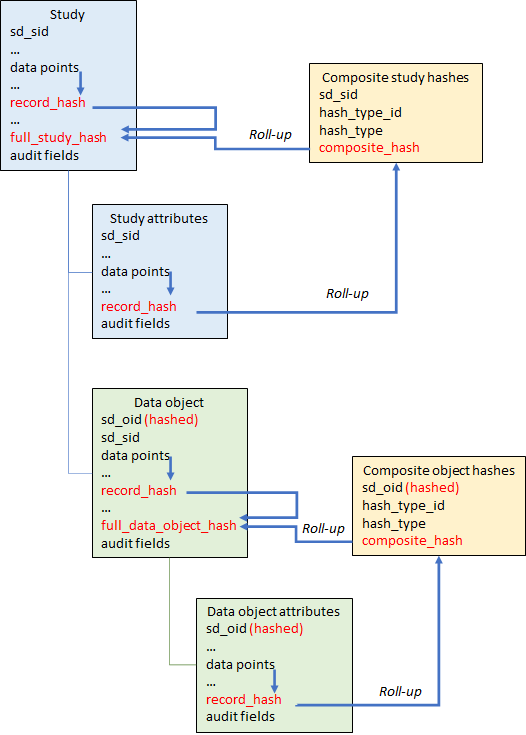

Hashes can also be used to efficently compare both the main entities (studies and data objects), and study and object attributes, between successive data imports. It is first necessary to be clear about what ‘comparing’ two records means, and how equality (or its converse, an edit) of records is defined. The system could compare every field in each record, but in fact only some fields are relevant to the record’s 'essential content' and need to be included in the comparison. Id fields, whether from the source data or generated as described above, a foreign id that link an attribute record to its parent entity, and audit fields that date the record, do not need to be compared, just the fields that make up the record's 'data payload' (see figure 2). Because the ad records only have the codes for categorised questions, and not the text decode (whereas the sd equivalent tables have both), only the code needs to be included for comparison purposes for these question types.

If an id is not available or not constant, then the payload fields need to be used to identify and match the record.

Rather than comparing each field in turn, however, a process that would be different for each record type, it is easier to first ‘roll up’ the relevant values into a single string and then compare only that field. If the values are equal the records are equal, in the sense that their content-containing fields are the same. If they are unequal there is a difference in one or more of those fields, and the new record needs to replace the old. The easiest way of ‘rolling up’ a set of values is to create a hash value from those values. Each table in the sd and ad schemas therefore includes a hash_record field. Again using the md5 algorithm, a string of 32 hex characters (=128 bits) is created whatever the length of the source string. The hash value is almost certain to be unique – there are 2^128 or 3.4 X 10^38 possible values. Using the text itself could result in very long strings that would take much longer to compare.

This process is most easily carried out in postgres itself, first using the json_build_array function to turn a subset of field values in a record into a json array, then casting that array into a string, and then hashing that string using the built in md5 function. For instance, to produce a hash for the study identifiers records and store it in a field called record_hash, the command below can be used:

public void create_study_identifier_hashes()

{

string sql_string = @"Update sd.study_identifiers

set record_hash = md5(json_build_array(identifier_value, identifier_type_id, identifier_org_id,

identifier_org, identifier_date, identifier_link)::varchar)";

h.ExecuteHashSQL(sql_string, "study_identifiers");

}

This provides a very simple way of creating a summary record_hash field for every record in the sd tables, later to be transferred into the ad tables. The main difficulty is that hashing is computationally expensive and with very large tables the system sometimes 'times out'. Along with the need to provide some periodic feedback to the calling program and / or user, it is therefore useful to split up the hashing process. Hence the call to ExecuteHashSQL. This function - for very large tables - splits the records to be hashed up into batches of 500,000, and constructs the freedback message(s) accordingly. It does so by adding an additional where condition to the sql string passed into it. The function is shown below...

public void ExecuteHashSQL(string sql_string, string table_name)

{

try

{

int rec_count = GetRecordCount(table_name);

int rec_batch = 500000;

// int rec_batch = 10000; // for testing

if (rec_count > rec_batch)

{

for (int r = 1; r <= rec_count; r += rec_batch)

{

string batch_sql_string = sql_string + " where id >= " + r.ToString() + " and id < " + (r + rec_batch).ToString();

ExecuteSQL(batch_sql_string);

string feedback = "Creating " + table_name + " hash codes, " + r.ToString() + " to ";

feedback += (r + rec_batch < rec_count) ? (r + rec_batch - 1).ToString() : rec_count.ToString();

StringHelpers.SendFeedback(feedback);

}

}

else

{

ExecuteSQL(sql_string);

StringHelpers.SendFeedback("Creating " + table_name + " hash codes - as a single batch");

}

}

catch (Exception e)

{

string res = e.Message;

StringHelpers.SendError("In hash creation (" + table_name + "): " + res);

}

}

Using Hashes for comparison II

In addition, for a group of related records, the hash values can themselves be rolled up to form a hash that corresponds to the total content of that group. This allows a group of records to be easily compared. For example, to create a hash for the whole group of study identifiers listed for each study, the following code can be used (sd_sid is the source data study id, usually the registry id, the hash_type_id and hash type are 11 and 'identifiers' respectively, in this instance, and the table_name would be 'study_identifiers'):

public void create_composite_study_hashes(int hash_type_id, string hash_type, string table_name)

{

string top_sql_string = @"Insert into sd.study_hashes

(sd_sid, hash_type_id, hash_type, composite_hash)

select t.sd_sid, " + hash_type_id.ToString() + ", '" + hash_type + @"',

md5(to_json(array_agg(t.record_hash ORDER BY t.record_hash))::varchar)

from sd." + table_name;

h.CreateCompositeStudyHashes(top_sql_string, hash_type_id, hash_type);

}

This function can be called for all study attribute types by changing the parameters, and a similar function exists for data object attribute tables. Again it is necessary to split up very large tables because the process is time consuming - the CreateCompositeStudyHashes function is shown below, and again uses the ids in the source to split up records into batches (this time of only 10,000) where necessary.

public void CreateCompositeStudyHashes(string top_sql_string, int hash_type_id, string hash_type)

{

try

{

int rec_count = GetRecordCount("studies");

int rec_batch = 10000;

//int rec_batch = 1000; // for testing

if (rec_count > rec_batch)

{

for (int r = 1; r <= rec_count; r += rec_batch)

{

string where_sql_string = " where s.id >= " + r.ToString() + " and s.id < " + (r + rec_batch).ToString();

string batch_sql_string = top_sql_string + @" t

inner join sd.studies s

on s.sd_sid = t.sd_sid

" + where_sql_string + " group by t.sd_sid;";

ExecuteSQL(batch_sql_string);

string feedback = "Creating composite study hash codes (" + hash_type + "), " + r.ToString() + " to ";

feedback += (r + rec_batch < rec_count) ? (r + rec_batch - 1).ToString() : rec_count.ToString();

StringHelpers.SendFeedback(feedback);

}

}

else

{

string sql_string = top_sql_string + @" t group by t.sd_sid;";

ExecuteSQL(sql_string);

StringHelpers.SendFeedback("Creating composite study hash codes (" + hash_type + ") as a single batch");

}

}

catch (Exception e)

{

string res = e.Message;

StringHelpers.SendError("In study composite hash creation: " + res);

}

}

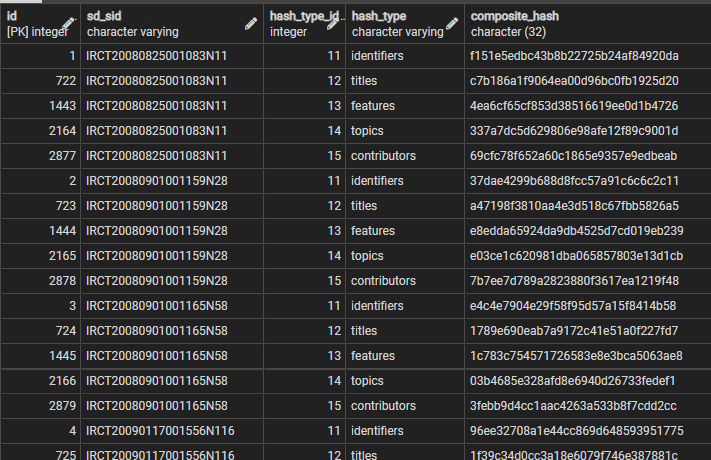

The table that results is illustarated below, for the first few studies listed in the Iranian Trial Registry

With this hash it is now possible to use a single comparison to see if any of the identifier data for a study has changed. The same is true of all other attributes, for studies and data objects. The process can be extended once more, to roll up all of the composite hashes for each study or data object, plus the record hash for the study or data object record itself, to produce a 'full_hash' for the whole entity. Comparing these full hashes allows an edit in any component of the study or data object data to be detected, because the full hash only remains the same if no changes at all have taken place in the 'essential data' carried by each record. If the full hashes are different, then the entity record hashes can be compared, and then the attribute composite hashes, to see where the editg(s) have occurred. This multiple hash roll-up process is shown in figure 4.

– but hashes are also constructed for whole records and used to detect changes in those records, and groups of related records, and finally in the entity (study or data object) as a whole.

In summary, at the end of each data extraction for each source, the system

- Creates a hash equivalent of the (non-id, non-audit) content of all study, study attribute, data object and data object attribute records, in all tables. In all cases this hash is stored in a field called ‘record_hash'.

- Creates a hash for each group of multiple attributes, e.g. for each study – a hash representing all identifiers, a hash for all titles, a hash for all study features, etc. For each data object: a hash for all titles, a hash for all instances, a hash for all dates, etc.

- The ‘composite hashes’ are stored in separate tables (one for study hashes, the other for data object hashes) that reference the parent study or data object, indicate the type of composite hash, and the hash value itself.

- The composite hash values for each study are rolled up with the hash for the study record itself to form a composite hash for the study and all of its components, a ‘study full hash’. It is stored in the Studies table.

- Similarly, the composite hash values for each data object are rolled up with the hash for the data object itself to form a composite hash for the data object and all of its components, a ‘data object full hash’. It is stored in the data objects table.

All of the hashes need to be transferred with the data records from the sd (session data) tables to the ad (accumulated data) tables whenever that transfer takes place, i.e. after the comparison process has identified the new or revised data. The details of the way in which these has values are compared and used in the import process is described in more detail on the Importing Data page