Difference between revisions of "Backing up the data"

(→Restoring the Database Data) |

(→Restoring the Database Data) |

||

| Line 253: | Line 253: | ||

* The folder holding psql and other Postgres exes (e.g. pg_dump, pg_restore) should be in the PATH variable, for system environment variables (though Set-Location can be used in the script to get round this, by changing the PowerShell working directory to the relevant folder) | * The folder holding psql and other Postgres exes (e.g. pg_dump, pg_restore) should be in the PATH variable, for system environment variables (though Set-Location can be used in the script to get round this, by changing the PowerShell working directory to the relevant folder) | ||

* A pgpass.conf file needs to be established in the user AppData\Roaming\postgresql\ folder (see notes in script). This allows the password to be supplied automatically. Each line in this text file must be of the form hostname:port:database:username:password, but a '''*''' may be used to provide a wild card, e.g. localhost:5432:*:postgres:password_string | * A pgpass.conf file needs to be established in the user AppData\Roaming\postgresql\ folder (see notes in script). This allows the password to be supplied automatically. Each line in this text file must be of the form hostname:port:database:username:password, but a '''*''' may be used to provide a wild card, e.g. localhost:5432:*:postgres:password_string | ||

| − | * PowerShell security needs to be set up to allow trhe script to run. By default unsigned scripts will not run (and can fail silently or with a misleading error message). A typical command would be '''Set-ExecutionPolicy -ExecutionPolicy Undefined -Scope CurrentUser''' | + | * PowerShell security needs to be set up to allow trhe script to run. By default unsigned scripts will not run (and can fail silently or with a misleading error message). A typical command would be '''Set-ExecutionPolicy -ExecutionPolicy Undefined -Scope CurrentUser''' (or CurrentMachine). Such commands will require the PowerShell terminal to be run as an administrator. |

Because restore of several or all schemas should be a relatively rare process the PowerShell script below is not scheduled. It is designed to be run, as required, from a PowerShell terminal. It provides feedback on progress for each restore operation. | Because restore of several or all schemas should be a relatively rare process the PowerShell script below is not scheduled. It is designed to be run, as required, from a PowerShell terminal. It provides feedback on progress for each restore operation. | ||

<div style="font-family: monospace; font-size: 13px" > | <div style="font-family: monospace; font-size: 13px" > | ||

Revision as of 13:44, 11 December 2022

Contents

Introduction

There are two sets of data that need to be backed up from the MDR system:

- The data in the databases

- The downloaded XML files

In both cases the data is first compressed on the server – as backup files for the databases, and as zip archives for the XML files. The compressed data is then also copied onto a machine that is outside the OVH infrastructure.

Backing up the databases

Each schema in each database is backed up within the same procedure. The PostgreSQL utility pgdump is used to construct a compressed folder for each schema, and name it using the source database and schema name, e.g. drks_ad.backup. Each folder contains a series of 'tarball' archive files, with extension .dat.gz. These archives must also be restored using pg_restore – they cannot be read directly. The alternative, of creating backup files as SQL statements, was rejected because it results in enormous files that are difficult to compress.

Because pgdump is a console program that takes parameters it is relatively easy to call it within a Powershell script, and then schedule the script. Essentially the script

- first determines the day of the week, to identify the destination folder for the backups,

- establishes 2 SQL statements, one that retrieves all the databases present on the PostgreSQL instance, and a second that brings back all the schemas of interest in those databases,

- deletes all files in the back up folder for that day,

- uses the Postgres CLI psql to call the SQL commands constructed above. Note the connection parameters are not included in the script - they are instead stored on the machine.

- for each schema, use pgdump to create a backup folder.

The Powershell script is below. It is heavily commented, and those comments should explain most of the parameters used for the various functions.

# set up variable pointing to backup location

# and transfer the execution context to the Postgres folder

# so that Postgres .exes (psql and pg_dump) can be found

$backup_folder = "C:\MDR_DBBackups\Postgres"

$postgres_folder = "C:\Program Files\PostgreSQL\12\bin\"

# Obtain day and folder name

$day = ((get-date).DayOfWeek).ToString().Substring(0,3)

$day_suffix = switch($day)

{

"Sun" {"_01_Sun"; Break}

"Mon" {"_02_Mon"; Break}

"Tue" {"_03_Tue"; Break}

"Wed" {"_04_Wed"; Break}

"Thu" {"_05_Thu"; Break}

"Fri" {"_06_Fri"; Break}

"Sat" {"_07_Sat"; Break}

}

$backup_folder = $backup_folder + $day_suffix

# sql required to bring back a list of databases in the cluster

# (works in any database)

$db_sql = "select datname from pg_database where datistemplate = false"

# sql required to bring back list of schemas of interest in each database

# i.e. those that include tables, excluding foreign tables or views,

# and that are not part of the system catalogues

$schema_sql = "select distinct s.schema_name from information_schema.schemata s inner join information_schema.tables t"

$schema_sql = $schema_sql + " on s.schema_name = t.table_schema where table_type <> 'FOREIGN' and table_type <> 'VIEW'"

$schema_sql = $schema_sql + " and schema_name <> 'pg_catalog' and schema_name <> 'information_schema'"

# get the dbs in the cluster and for each the schemas

# of interest. -qtAX used in each case simplifies the data returned

# from psql and makes it readable by powershell as a simple collection.

# -q = quiet (no messages) -t = tuples only (just values)

# -A = no align (no attempt at pretty arrangements) -X = no-psqlrc (do not read start-up files)

# -c is the command flag, followed by the sql statement

# calls to both psql and pg_dump use standard connection parameters

# but do not explicitly include password. For security, this is stored in pgpass.conf

# file, in %APPDATA%\postgresql\ for user, as per Postgres documentation

Set-Location $postgres_folder

# Need to first clear any pre-existing folders / files from the folder

Write-Host $backup_folder

Get-ChildItem $backup_folder -Recurse | Remove-Item -Recurse

$dblist = & psql -U postgres -d mdr -qtAX -c $db_sql

foreach($db in $dblist)

{

$schemalist = & psql -U postgres -d $db -qtAX -c $schema_sql

foreach($schema in $schemalist)

{

# Backup each schema using the 'Directory' option (-Fd)

# Provides a highly compressed folder. Using a plain sql backup

# resulted in huge files that were sometimes beyond the limit (2 GB)

# for compression within a Powershell script.

# Data must be restored using pg_restore.

# -c option means that tables are deleted (if they exist) and then recreated

# but note that database itself should exist on destination cluster.

# -n option makes it specific to the named schema

$file_name = $backup_folder + "\" + $db + "_" + $schema + ".backup"

Write-Host $file_name

& pg_dump -h localhost -U postgres -d $db -p 5432 -n $schema -Fd -c --if-exists -f $file_name

}

}

# Line below and Write Host in loop to be removed from

# production version. Retained here to assist development

# Read-Host -Prompt "Press Enter to exit"

The result of running the script is a set of dump folders in the relevant backup folder.

Zipping up the XML files

The XML files are stored in a set of folders, one for each source. Sources with very large numbers of files (e.g. ClinicalTrials.gov, PubMed) have the files distributed within sub-folders, usually by using their Id (in the source data, e.g. the NCT number). Sources with fewer files have no sub-folders, but may contain up to 50,000 files.

The folders are zipped by running a small utility written in C# called FileZipper. This is a console program that can take parameters. An -A flag will zip all files from all sources, whereas an -s flag will zip only the sources listed in a following string of source Ids (e.g. -s “100120, 100135”). A -J flag will zip the JSON files created by the MDR program.

For sources that have subfolders, the folders are split into 8 large groups, and each group is zipped into a single archive file. This is done to make it easier, if the need ever arose, to unzip the archives back to the original XML files. For source folders without subfolders all files are zipped into a single zip file. The zip files are placed into a folder, with sub-folders for each source. The location of the Zip folders is read from a JSON configuration file when the FileZipper utility begins

Copying the data from the server

The data is copied from the server by ‘pulling’ it with an installation of WinSCP on an external destination machine, using secure FTP (FTPS). The advantage of WinSCP over FileZilla Client is that the former has a CLI and so its operation can be scheduled, where as the latter can not (easily) be used in this way. In fact WinSCP also has a .Net assembly (WinSCPnet.dll) which provides a powerful mechanism for controlling the program, using either C# or any other .Net core based system such as PowerShell.

Setting up the WinSCP system is reasonably straightforward, though for the transfer to work it is also necessary to first install and set up a copy of FileZilla Server (distinct from FileZilla client) as an FTPS server on the source machine. FileZilla Server was used after initial attempts to use OpenSSH failed - it was not clear why. The FileZilla installation seems to set it up automatically as a service (start up type ‘Automatic’) and it launches a very basic GUI admin interface to the server. Further configuration of FileZilla Server requires the user to:

- Accept the default port for the admin GUI to connect.

- Open the machine’s firewall to the FileZilla exe, for both private and public networks.

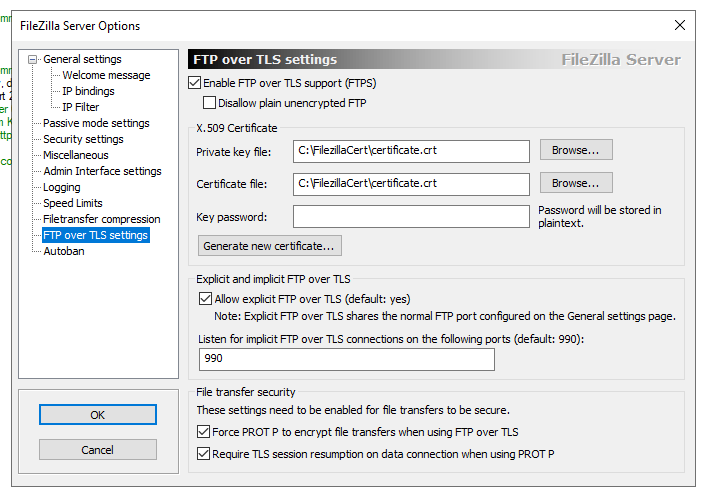

- Open up FileZilla Server settings and go to ‘FTP over TLS Settings’ – first ticking the box at the top to enable this.

- Select ‘Generate a Certificate’ (assuming there is not one to hand) which brings that dialog up.

- Select somewhere accessible on the machine to store the certificate and complete some basic details in the certificate dialog (not shown below) – really just two letters in the country box, such as 'UK' or 'FR', rather than a numeric code as some web sites say. It is not necessary to fill out any more of the form (leave street, city etc blank).

- Click ‘Generate a Certificate’ again and the system does exactly that, closing the certificate dialog and returning to the FTP/TLS dialog.

As figure 1 shows, the dialog gives details of what ports to use, depending on whether explicit or implicit TLS is to be used. Explicit uses the FTP general port (the default is 22) whilst implicit uses 990. On initial contact to the server remotely it gives an initial ‘Trust this server?’ message because of the locally generated certificate but afterwards seems happy.

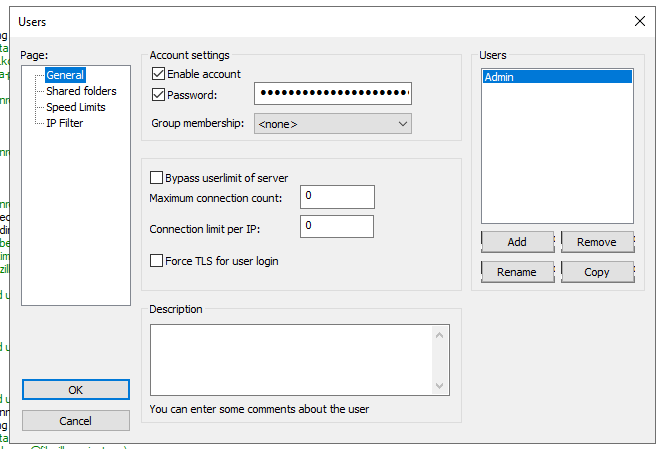

It is then necessary to click the Users button to set up a user account – here the standard Admin account used when logging on is used, and add the relevant password

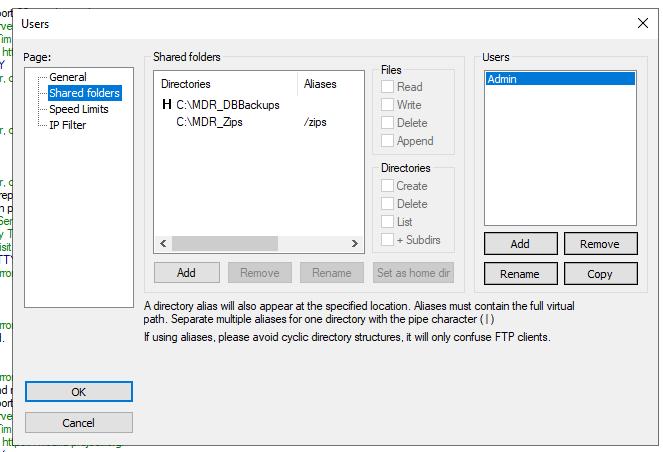

A folder should be made sharable for that user, here the backups folder. This becomes the root folder. Additional folders should be aliased to a 'pseudo-folder' below the initial root, which means that it is not necessary to share the whole of the source machine's C drive.

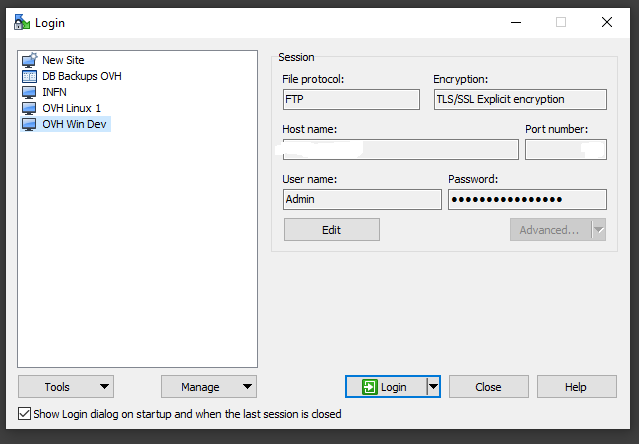

WinSCP should now be installed on the remote machine (which uses Windows 10). The UI can be used to configure a specific connection, completing the requested details. Obviously the protocol has to be FTP using explicit TLS, to match the Server FTPS setup.

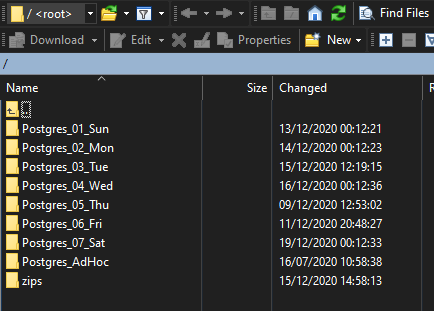

Once the connection is made by 'logging in' on the WinSCP client the remote folders appear in the WinSCP interface, as shown in figure 5.

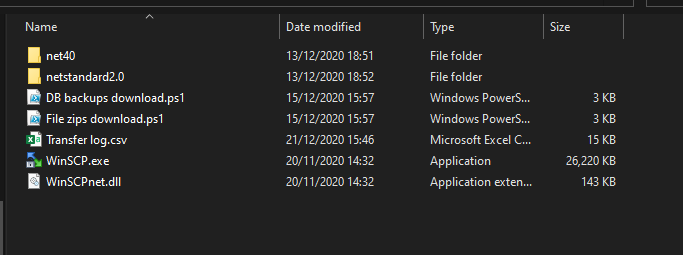

That establishes a connection but more work is required to configure WinSCP for automatic, scheduled operation. For this a script is required. The WinSCPnet.dll is a ".NET wrapper around WinSCP’s scripting interface that allows your code to connect to a remote machine and manipulate remote files over SFTP, FTP, WebDAV, ... ... from .NET languages, such as C# ... or from environments supporting .NET, such as PowerShell...". It is the obvious tool for this job. It should be downloaded and unzipped / located so as to be easily accessible to the scripts that call upon it - in this instance it was simply loaded into the same folder. Note that an additional copy of the WinSCP.exe is also part of the package.

Non functional files have been tidied away into the sub-folders

Note that the installation has 2 versions of the .dll file. One for PowerShell 6 or 7, that use .Net core, in the netstandard2.0 folder, and one for PowerShell 5 or lower, in the net40 folder - the files have the same name but slightly different sizes. A copy of the correct WinSCP.dll should be placed in the main location where it will be referenced (if not there already). The exe file is the same in both instances.

A second useful preparatory step is the download and installation of the PSFramework Module for PowerShell. This provides useful additional functionality, the most relevant in this instance being a relatively straightforward logging capability. The PSFramework is downloaded and installed as a PowerShell Module by going to the PowerShell Gallery and running: Install-Module -Name PSFramework.

The script for transferring the database backups is shown below. That for the zipped files is almost exactly the same though the remote source folders will obviously be different. It is based on examples within the WinSCP documentation, and uses the fact that operations within the WinSCP UI can be used to generate the corresponding .Net script in various languages - in this instance in PowerShell. The script:

- First sets up a named CSV file as the 'logging provider', meaning all logging messages go to this file.

- Gets the day of the week, and so derives the name of the source and destination folders.

- Deletes the current contents of the destination folder.

- Loads the WinSCPnet.dll wrapper so that WinSCP is accessible to PowerShell.

- Sets up a WinSCP session object. The details have been removed here, but they are easily obtained by connecting to the same server in the UI and then using the code generation feature of WinSCP to grab the equivalent code (WinSCP provides good documentation of this feature).

- Changes the default failure setting to log an error but continue to transfer other files.

- Transfers all contents of the source directory to the destination directory - with the impressively succinct GetFiles command

- Disconnects from the remote server

try

{

# Set up the logging provider as a log file

$paramSetPSFLoggingProvider = @{

Name = 'logfile'

InstanceName = 'Transferring DB backups'

FilePath = 'D:\MDR Scripts\Transfer log.csv'

Enabled = $true

}

Set-PSFLoggingProvider @paramSetPSFLoggingProvider

$module = 'Transferring DB backups'

Write-PSFMessage -Level Important -Message 'Started module.' -ModuleName $module -Tag 'Info'

# get the day abbreviation

$day = ((get-date).DayOfWeek).ToString().Substring(0,3)

$day_suffix = switch($day)

{

"Sun" {"01_Sun"; Break}

"Mon" {"02_Mon"; Break}

"Tue" {"03_Tue"; Break}

"Wed" {"04_Wed"; Break}

"Thu" {"05_Thu"; Break}

"Fri" {"06_Fri"; Break}

"Sat" {"07_Sat"; Break}

}

# Get folder names for this day

$remote_folder = "/Postgres_" + $day_suffix

$local_folder = "D:\MDR Backups\Postgres_" + $day_suffix

# delete current contents of local folder

Get-ChildItem $local_folder -Recurse | Remove-Item -Recurse

Write-PSFMessage -Level Important -Message 'Existing files deleted in local folder' -ModuleName $module -Tag 'Info'

# Load WinSCP .NET assembly

Add-Type -Path "D:\MDR Scripts\WinSCPnet.dll"

# Set up session options

$sessionOptions = New-Object WinSCP.SessionOptions -Property @{

Protocol = [WinSCP.Protocol]::Ftp

HostName = "XX.XX.XX.XX" ''(redacted)''

PortNumber = XX ''(redacted)''

UserName = "Admin"

Password = "XXXXXXXXXXXXX" ''(redacted)''

FtpSecure = [WinSCP.FtpSecure]::Explicit

TlsHostCertificateFingerprint = "aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa:aa" ''(redacted)''

}

$session = New-Object WinSCP.Session -ErrorAction Stop

# Connect

$session.Open($sessionOptions)

Write-PSFMessage -Level Important -Message 'Connection opened with remote server' -ModuleName $module -Tag 'Info'

try

{

$session.add_QueryReceived( {

Write-PSFMessage -Level Warning -Message 'Error: $($_.Message)' -Tag 'Failure' -ModuleName $module -ErrorRecord $_

$_.Continue()

} )

# Transfer files

$session.GetFiles($remote_folder, $local_folder).Check()

Write-PSFMessage -Level Important -Message 'Completed transfer of files.' -ModuleName $module -Tag 'Success'

}

finally

{

# Disconnect, clean up

$session.Dispose()

}

exit 0

}

catch

{

Write-PSFMessage -Level Warning -Message $Error[0] -Tag 'Failure' -ModuleName $module

exit 1

}

Scheduling back up activity

Because both backups and XML files are only scheduled to change in certain parts of the week they do not have to be backed up every day.

For the database data

- Backups are carried out at 00.05 on Wednesday and Saturday morning. This is just before and just after the databases are usually changed.

- The backup folders are transferred to an external machine at 04.00 on Wednesday and Saturday morning.

For the XML files

- The zipping of the files is carried out at 00.05 on Monday and Thursday morning. This is just before and just after the XML files are usually updated.

- The zip files are transferred to an external machine at 04.00 on Monday and Thursday morning.

Scheduling of the backups / zips on the server uses Windows Task Scheduler, scheduling PowerShell and FileZipper respectively. PowerShell is version 5.1 on the server, the default installation for Windows Server 2019. Scheduling the C# utility is straightforward, but for PowerShell to work properly with the task scheduler, after the job is set up initially using 'Create Basic Task', it has to be configured in a certain way:

- In the General tab, ensure 'Run whether user is logged in or not' is checked.

- In the General tab, ensure the 'Configure for' drop down indicates Windows Server 2019.

- In the Settings tag, for the 'if the task is already running, then the following rule applies' ensure that 'Stop the existing instance' is selected. This is more to ensure that the scheduled task is not blocked from any earlier error.

- In the Actions tag, ensure that the correct version of PowerShell is referenced. For v5.1 this is usually 'C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe'

- In the Actions tag, ensure that the parameters begin with the -no profile and -ExecutionPolicy Bypass flags, and the script reference is preceded by -File, e.g.

-noprofile -ExecutionPolicy Bypass -File "D:\MDR Scripts\DB backups.ps1"

For scheduling the transfer to the external machine, Powershell is used for both the database backups and the zip files. Again the job must be configured in a particular way. Because the external machine runs Windows 10 and uses the cross platform PowerShell 7, based on .Net core, (the scripts could therefore run on a Linux box) the configuration should be as described above, except that:

- In the General tab, ensure the 'Configure for' drop down indicates Windows 10.

- In the Actions tag, ensure that the correct version of PowerShell is referenced. For v7 this is usually 'C:\Program Files\PowerShell\7\pwsh.exe'.

Restoring the Database Data

Restoring the databases requires use of the pg_restore utility against each of the back-up folders created by the scheduled back-up. If only a single schema needs restoration, back to the source Postgres instance, then the easiest way to do that is via pgAdmin, by selecting the schema, right-clicking, selecting Restore and then completing the resulting restore dialog. The restore type will be Directory and the user should be 'postgres'.

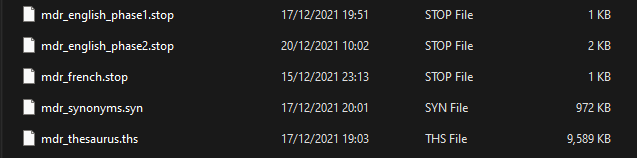

If several or all schemas need to be restored a PowerShell script is available, shown below. This scenario might arise, for example, when copying the databases to another Postgres installation, either on a completely different machine, or on the same machine as part of a Postgres upgrade process. As a preparatory step the folders to be restored, as generated by the backup process, need to be copied into an accessible folder on the destination machine if not there already. If the restore is on a different installation from the original, there are some additional files, dictionaries and related data that support text processing, that also need to be copied first. They are shown if figure 7 below, and are to be found in the C:\Program Files\PoastgreSQL\nn\share\tsearch_data\ folder, where nn is the PostgreSQL version number, e.g. 13 or 15.

If these files are not copied than attempting to restore the mdr.core schema, which makes use of text processing to set up fields of lexemes from study titles and topics (to aid searching), results in a large number of error messages and the restore for that schema is aborted. Three other preparatory steps are required for the restore script to run, if not already set up on the destination machine:

- The folder holding psql and other Postgres exes (e.g. pg_dump, pg_restore) should be in the PATH variable, for system environment variables (though Set-Location can be used in the script to get round this, by changing the PowerShell working directory to the relevant folder)

- A pgpass.conf file needs to be established in the user AppData\Roaming\postgresql\ folder (see notes in script). This allows the password to be supplied automatically. Each line in this text file must be of the form hostname:port:database:username:password, but a * may be used to provide a wild card, e.g. localhost:5432:*:postgres:password_string

- PowerShell security needs to be set up to allow trhe script to run. By default unsigned scripts will not run (and can fail silently or with a misleading error message). A typical command would be Set-ExecutionPolicy -ExecutionPolicy Undefined -Scope CurrentUser (or CurrentMachine). Such commands will require the PowerShell terminal to be run as an administrator.

Because restore of several or all schemas should be a relatively rare process the PowerShell script below is not scheduled. It is designed to be run, as required, from a PowerShell terminal. It provides feedback on progress for each restore operation.

# set up variable pointing to backup folders' location

# and transfer the execution context to the Postgres folder

# so that Postgres .exes (psql and pg_restore) can be found

Write-Host "Starting..."

$backup_folder = "C:\MDR_DBs"

$postgres_folder = "C:\Program Files\PostgreSQL\15\bin\"

# Need to get the list of folders in the backup directory

$folderlist = Get-ChildItem -Path $backup_folder -Directory | Select-Object -ExpandProperty Name

# Each folders name is made up of <database name>_<schema name>.backup

# e.g. lebctr_sd.backup, context_geonames.backup, mdr_core.backup

# Loop through the backup folders, and for each extract the db and schema names

$current_db = "**"

foreach($folder_name in $folderlist)

{

# remove the last 7 characters - '.markup'

# extract database name and schema name from each file

$db_schema = $folder_name.Substring(0, $folder_name.Length - 7)

$underscore_pos = $db_schema.IndexOf("_")

$db = $db_schema.Substring(0, $underscore_pos)

$schema = $db_schema.Substring($underscore_pos + 1, $db_schema.Length - $underscore_pos - 1)

# connect to postgres database by invoking psql CLI

# psql location, e.g. C:\Program Files\Postgres\15\bin\

# needs to be in path (system variables) for this to work

# Calls to both psql and pg_restore use standard connection parameters

# but do not explicitly include password. For security, this is stored in pgpass.conf

# file, in AppData\Roaming\postgresql\ for the user as per Postgres documentation.

# Note that the 'user' in this context is the PowerShell user - not the

# postgres user (which is normally the default 'postgres').

# For example the user is 'steve' on my local machine, 'admin' on a server, etc.

# The pgpass.conf File appears to be created automatically in the postgres user AppData, and

# therefore it and the parent folders can be copied, pasted and modified from there.

If ($db -ne "postgres")

# ignore the default postgres database completely, used for dropping

# and creating other databases, (a DB cannot drop itself) and for

# instance specific schedule data if pgAgent used - but has no data

{

If ($db -ne $current_db)

{

# recreate database

Write-Host " "

$db_sql1 = "DROP DATABASE IF EXISTS " + $db + " (FORCE);"

Write-Host $db_sql1

& psql -h localhost -d postgres -U postgres -p 5433 -w -c $db_sql1

$db_sql2 = "CREATE DATABASE " + $db + ";"

Write-Host $db_sql2

& psql -h localhost -d postgres -U postgres -p 5433 -w -c $db_sql2

# drop the default schema

$current_db = $db

$drop_schema_sql = "DROP SCHEMA public"

& psql -h localhost -d $current_db -U postgres -p 5433 -w -c $drop_schema_sql

Write-Host $current_db "recreated"

}

# add the necessary schemas

$schema_sql = "CREATE SCHEMA " + $schema

& psql -h localhost -d $current_db -U postgres -p 5433 -w -c $schema_sql

# use the pg_restore exe to reload the data

$srce_dir = $backup_folder + "\" + $folder_name

Write-Host $srce_dir

& pg_restore -h localhost -U postgres -p 5433 -w -d $current_db -F d -n $schema $srce_dir

Write-Host $schema "restored"

}

}

# Line below to be removed from

# production version. Retained here to assist development

Read-Host -Prompt "Press Enter to exit"